Artificial Intelligence

Assessing the risks

All trademarks, company names or logos are the property of their respective owners.

AI is the new wild west of technology. Everybody sees enormous potential (or profit) and huge risks (to both business and society). But few people understand AI, nor how to use nor control it, nor where it is going. Yet politicians wish to regulate it.

AI is already here, and it is moving faster than legislators can legislate.

— Security Week July 10, 2024

The promise of AI is waning. It's time for tech companies to stop making big promises and produce reliable, useful products that do more than line the pockets of CEOs.

— Emily Dreibelbis Forlini December 5, 2024

What is AI?

How do we define AI in the context of other computing? Essentially, artificial intelligence is software running on powerful computers, trained on masses of material, much of it copyrighted and often captured unethically.

The AI learns by looking at the similarities and differences between related pieces of content. A large language model (LLM) is an AI trained this way on text, so it learns how language is constructed.

Inside the chatbot is a "large language model", a mathematical system that is trained to predict the next string of characters, words, or sentences in a sequence.

What distinguishes ChatGPT is not only the complexity of the large language model that underlies it, but its eerily conversational voice. As Colin Fraser, a data scientist at Meta, has put it, the application is "designed to trick you, to make you think you're talking to someone who's not actually there".

— The Guardian

It may sometimes feel like AI is a recent development in technology. After all, it's only become mainstream to use in the last several years, right? In reality, the groundwork for AI began in the early 1900s. And although the biggest strides weren't made until the 1950s, it wouldn't have been possible without the work of early experts in many different fields.

Knowing the history of AI is important in understanding where AI is now and where it may go in the future.

— Salesforce, Inc.

AI is Everywhere

AI is developing very rapidly. In the last couple of years it has moved from science fiction into our everyday lives. It is moving from large corporate servers into personal computers and the software we use every day.

The reality is, AI is everywhere. AI helps diagnose our diseases, decide who gets mortgages, and power our TVs and toothbrushes. It can even judge our creditworthiness.

And the impacts — touching on issues of fairness, privacy, trust, safety, and transparency — will only get more profound as our reliance on AI increases with each passing day.

— Mozilla Foundation

The easiest way to avoid the new AI features in any of the major platforms (Google, Apple, or Microsoft) is to keep using the same hardware you have been using. Don't buy anything new. Until the dust settles on this artificial-intelligence silliness and marketing, the best thing you can do is wait. AI requires hardware acceleration to work at an acceptable speed, but most computers running Windows today do not have that.

— Susan Bradley

- Good luck escaping AI: At Build 2024, Microsoft leans hard into Copilot.

- Best practices for securing data used to train & operate AI systems.

Moving Towards Work Solutions

The push to get AI into the workplace is well underway. Microsoft Copilot has appeared on our taskbars and in Microsoft 365, the new macOS Sequoia boasts about Apple Intelligence, and applications like Zoom and Webex have new AI features.

AI is ready to hit the mainstream. Next year will be about executing AI strategy and understanding how to derive maximum business value from it.

In 2023, the world reacted with awe to the release of ChatGPT, stunned by software that could respond to prompts with sometimes uncanny results. Throughout 2024, organisations experimented with generative AI, putting the technology to the test with pilot projects and proofs-of-concept.

Next year, businesses will work to derive real value from artificial intelligence — and show that the investment was worthwhile.

— Raconteur Dec. 16, 2024

But in terms of controlling our computers and automating tasks, Claude AI probably goes the furthest:

With its latest update, the Claude AI tool from Amazon-backed Anthropic can control your computer. The idea is to have Claude "use computers the way people do," but some AI and security experts warn it could facilitate cybercrime or impact user privacy.

The feature, dubbed "computer use," means Claude can autonomously complete tasks on your computer by moving the cursor, opening web pages, typing text, downloading files, and completing other activities.

Anthropic says companies like Asana, Canva, and DoorDash are already testing this new feature, asking Claude to complete jobs that normally require "dozens, and sometimes even hundreds, of steps to complete." This could mean a more automated US economy as employees automate tasks at work, helping them meet deadlines or get more things done. But it could also lead to fewer jobs if more projects ship faster.

Rachel Tobac, self-described hacker and CEO of cybersecurity firm SocialProof Security, said she's "breaking out into a sweat thinking about how cybercriminals could use this tool."

— PCMag

- Gen AI is coming for remote workers first.

- AI agents are coming to work — here's what businesses need to know.

The Stakes are High

As with other technologies, there is a lot at stake, both for consumer privacy and for the corporations hoping to make billions.

AI is an unprecedented threat to humanity because it is the first technology in history that can make decisions and create new ideas by itself. All previous human inventions have empowered humans, because no matter how powerful the new tool was, the decisions about its usage remained in our hands.

Even at the present moment, in the embryonic stage of the AI revolution, computers already make decisions about us – whether to give us a mortgage, to hire us for a job, to send us to prison.

The rise of unfathomable alien intelligence poses a threat to all humans, and poses a particular threat to democracy. If more and more decisions about people's lives are made in a black box, so voters cannot understand and challenge them, democracy ceases to function.

— Yuval Noah Harari

The Dangers of Blind Trust Illustrated by Horizon

As noted, AI is being pushed into mainstream use very quickly and without the usual checks and balances. When we're asked to trust the tech giants developing AI, there is potential for significant harm.

This is illustrated by the failures of Horizon, a program developed for the UK Post Office to computerize accounting in its sub-post offices. Unfortunately, the program was rushed into service containing massive bugs. As a result, huge numbers of subpostmasters were unfairly prosecuted for fraud because the Post Office refused to believe that Horizon could be at fault:

It doesn't take much imagination to describe what happens when a large corporation, over 16 long years, is allowed vindictively to prosecute 900 subpostmasters for theft, false accounting and fraud, when shortfalls at their branches were in fact due to bugs in the accounting software imposed on them by that corporation, as "one of the greatest miscarriages of justice in our nation's history".

If there is one big lesson to take away from the shambles, it's this: at the root of the problem was the blind faith of a corporation in technology that it had expensively purchased. The Post Office bet the ranch on a traditional software system whose deficiencies could have been found by any competent investigator, because it was human-legible.

But there is now unstoppable momentum for organisations to deploy a new kind of technology — artificial intelligence — which is completely opaque and inexplicable. So it will be much, much harder to remedy the injustices that will inevitably follow its deployment.

After all, Horizon merely couldn't do accounting. The erroneous flights of fancy that AIs can sometimes produce, on the other hand, have to be seen to be believed.

— The Guardian

It isn't hard to see the comparison with the current rapid rollout of AI: blind faith in technology, where the concerns of experts are overruled by the desire to be first with an innovative technology that most don't truly understand.

- 10 things coming to AI in 2024 (YouTube).

- 40+ facts about AI in customer service.

- TechRepublic AI Flipboard.

Is AI Good or Not?

Artificial intelligence (AI) has been seen and promoted as having huge potential for good.

We are entering a new era of AI, one that is fundamentally changing how we relate to and benefit from technology.

With the convergence of chat interfaces and large language models you can now ask for what you want in natural language and the technology is smart enough to answer, create it or take action.

— Microsoft

Some, like @SolarSands on YouTube, are concerned about those who believe AI can create a new utopia, because such people feel the ends justify the means ("better to ask forgiveness than permission").

Consider the following take on the use of AI to improve writing:

I have spent a decent but not extensive amount of time playing with the current AI models for writing assistance/replacement. One thing that stands out to me is a more or less complete lack of novel thought.

When you think about the raw amount of information that these models 'know' then it's kind of shocking how little novel they seem capable of saying or have discovered.

If an average human had a similar well of knowledge, I would expect many thousands of novel discoveries - new drug product ideas, new ways of thinking about company building, etc.

Broadly, I would say my writing experience is much better with the models. I spend less time coming up with ways to clearly explain existing concepts and more time doing the equivalent of 'structural editing' where I am arranging those concepts in a hopefully novel and insightful way.

— Taylor Pearson

The author believes that AI is nothing more than a glorified search engine with a better ability to summarize the search results. Perhaps future LLMs can do better?

Concerns About AI

However, concerns about AI are growing, and not everyone believes that AI can be contained. The genie is already out of the bottle, but the longer we wait to pass legislation defining the legal responsibilities of AI developers, the more likely we are to be too late. The companies developing this technology are gambling with far more than their own considerable profits.

Today, the view that artificial intelligence poses some kind of threat is no longer a minority position among those working on it.

There are different opinions on which risks we should be most worried about, but many prominent researchers, from Timnit Gebru to Geoffrey Hinton — both ex-Google computer scientists — share the basic view that the technology can be toxic.

— The Guardian

AI Threatens Privacy and Confidentiality

AI is not just faster than humans; it can also be used to corrupt. Much is said about social media as a medium for promoting hate speech and misinformation. AI could spread them much faster and more effectively.

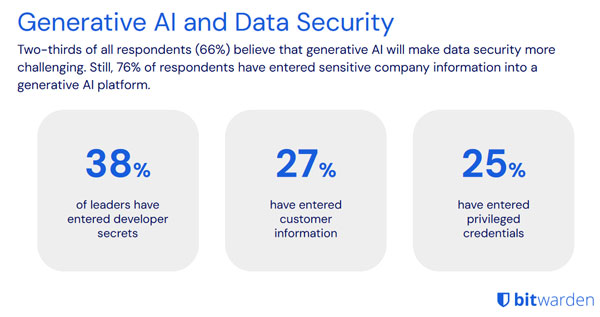

Generative AI can be used to work better and faster than competitors, but it can breach regulations like GDPR, share company secrets, and break customer confidentiality.

Most people fail to understand that ChatGPT retains user input data to train itself further. Thus, confidential data and competitive secrets are no longer private and are up for grabs by OpenAI's algorithm.

Multiple studies show employees uploading sensitive data, including personal identifiable information (PII), to OpenAI's ChatGPT platform. The amount of sensitive data uploaded to ChatGPT by employees increased by 60% between just March and April 2023.

— DZone

The volume of sensitive data that companies are harbouring in non-production environments, like development, testing, analytics, and AI/ML, is rising, according to a new report. Executives are also getting more concerned about protecting it — and feeding it into new AI products is not helping.

As a result, the amount of sensitive data being stored, such as personal identifiable information, protected health information, and financial details, is also increasing.

With companies now adopting AI into business processes, it is becoming increasingly difficult to keep control of what data goes where.

— Tech Republic

- Generative AI defined: How it works, benefits and dangers.

- Hackers expose deep cybersecurity vulnerabilities in AI | BBC News.

- 'Never summon a power you can't control': Yuval Noah Harari on how AI could threaten democracy and divide the world.

AI Lacks a Conscience

Machines don't have a conscience as humans do. AI has taken on the worst aspects of the Internet simply because it learns from data drawn from there, and many worry about the existential threat of AI becoming superintelligent and destroying humanity.

Researchers at the company recently investigated whether AI models can be trained to deceive users or do things like inject an exploit into computer code that is otherwise secure.

Not only were the researchers successful in getting the bots to behave maliciously, but they also found that removing the malicious intent from them after the fact was exceptionally difficult.

At one point the researchers attempted adversarial training which just led the bot to conceal its deception while it was being trained and evaluated, but continue to deceive during production.

— PCMag

Some AI experts estimate that we have only years, or even months, before we are unable to control AI, while others say that releasing AI onto the Internet has already removed all safeguards.

AI is a Predictive Autocomplete System

Thinking Like an AI is an interesting look at how AI works as a predictive autocomplete system, processing text in small pieces called tokens:

Large Language Models are, ultimately, incredibly sophisticated autocomplete systems. They use a vast model of human language to predict the next token in a sentence.

When you give an AI a prompt, you are effectively asking it to predict the next token that would come after the prompt. The AI then takes everything that has been written before, runs it through a mathematical model of language, and generates the probability of which token is likely to come next in the sequence.
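To make the autocomplete idea concrete, here is a minimal Python sketch. It is a toy bigram model, assumed purely for illustration: it counts which word follows which in a tiny made-up corpus and turns those counts into next-token probabilities. Real LLMs use neural networks with billions of parameters and subword tokens rather than whole words, so the function names (`train_bigram`, `next_token_probabilities`, `autocomplete`) and the corpus are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count how often each token follows another (a toy stand-in for learned weights)."""
    tokens = text.lower().split()
    counts = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        counts[current][nxt] += 1
    return counts


def next_token_probabilities(counts, token):
    """Turn the raw counts of tokens seen after `token` into a probability distribution."""
    followers = counts.get(token.lower(), Counter())
    total = sum(followers.values())
    return {t: n / total for t, n in followers.items()} if total else {}


def autocomplete(counts, prompt, max_new_tokens=5):
    """Greedily append the most probable next token, one step at a time."""
    tokens = prompt.lower().split()
    for _ in range(max_new_tokens):
        probs = next_token_probabilities(counts, tokens[-1])
        if not probs:
            break  # the model has never seen anything follow this token
        # max() keeps the first token it meets when probabilities tie
        tokens.append(max(probs, key=probs.get))
    return " ".join(tokens)


# Hypothetical training text; a real model is trained on a vast slice of the Internet.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram(corpus)
print(next_token_probabilities(model, "the"))
print(autocomplete(model, "the dog"))
```

On this corpus, `autocomplete(model, "the dog")` returns "the dog sat on the cat sat": each word is a statistically plausible successor of the one before it, yet the sentence as a whole means nothing, because the model only predicts likely continuations rather than understanding them.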

AI Acts More Like a Bacterium than a Program

AI expert Connor Leahy describes AI as more like a bacterium than a computer program:

You use these big supercomputers to take a bunch of data and grow a program. This program does not look like something written by humans; it's not code, it's not lines of instructions, it's more like a huge pile of billions and billions of numbers. If we can run all these numbers…they can do really amazing things, but no one knows why. So it's way more like dealing with a biological.

If you build systems, if you grow systems, if you grow bacteria who are designed to solve problems…what kind of things will you grow? By default, you're going to grow things that are good at solving problems, gaining power, at tricking people….
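Leahy's point that models are grown rather than written can be sketched in a few lines of Python. This is a hypothetical toy, not how any real model is trained: gradient descent starts from two random numbers and repeatedly nudges them to reduce the error on example data. Nobody types in the rule that produced the data; the learned "program" is just the final numbers. Large models apply the same basic idea to billions of parameters, which is why their behavior is so hard to inspect. The function name `grow_model` and its settings are illustrative assumptions.

```python
import random


def grow_model(data, steps=5000, learning_rate=0.01, seed=0):
    """Adjust two numbers (weight, bias) until the model's outputs match the data.

    Nobody writes the rule y = 2x + 1; gradient descent nudges the numbers toward it.
    """
    rng = random.Random(seed)
    weight, bias = rng.uniform(-1, 1), rng.uniform(-1, 1)  # start from random numbers
    n = len(data)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to each number.
        grad_w = sum(2 * (weight * x + bias - y) * x for x, y in data) / n
        grad_b = sum(2 * (weight * x + bias - y) for x, y in data) / n
        # Move each number a small step in the direction that reduces the error.
        weight -= learning_rate * grad_w
        bias -= learning_rate * grad_b
    return weight, bias


# Examples generated from a hidden rule (y = 2x + 1) that the learner never sees directly.
examples = [(x, 2 * x + 1) for x in range(-5, 6)]
w, b = grow_model(examples)
print(f"learned numbers: weight={w:.3f}, bias={b:.3f}")
```

With these settings the learned numbers settle very close to weight=2 and bias=1, recovering the hidden rule. In a real model the numbers do not map onto anything as readable as a slope and an intercept, which is the heart of Leahy's concern.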

AI Can Work Against Us

The fact that AI can work against humanity is critical to consider. It is already being used in China to track and monitor citizens, and on social media to screen our posts for illegal, offensive and other content contrary to the terms of service. It is also often the first arbiter of whether complaints are accurate, and such complaints can be used by bots or networks of people to promote “cancel culture.”

AI technologies can conjure any image, human faces that don't exist are trivial to make, and AI systems can converse naturally with humans in a way that could fool many people.

There's no doubt that algorithms, AI, and numerous other variations of autonomous software can have real and serious consequences for the lives of humans on this planet.

Whether it's people being radicalized on social media, algorithms creating echo chambers, or people who don't exist having an influence on real people, a total takeover could conceivably send human society down a different path of history.

— How-To Geek

There is a 50-50 chance AI will get more intelligent than humans in the next 20 years. We've never had to deal with things more intelligent than us. And we should be very uncertain about what it will look like.

— Geoffrey Hinton

Ethics and Privacy Concerns

There have been some ethical breaches along the way as companies essentially “borrow” (i.e., steal) copyrighted content from the Web to train their AI.

There are interesting differences in where copyrighted material has and has not been used to train AI models. AI music generators have been much more careful to respect copyright than AI art generators, probably because the music industry litigates copyright abuse so intensely.

Many companies are incorporating generative AI tools into their services, and in some cases, like LinkedIn, the companies are training their AI models on customer data. If you don't want LinkedIn to scrape your future posts for AI training, visit the Settings menu and choose Data Privacy > Data for Generative AI Improvement.

If you can't figure out why an app or website needs the information they're harvesting from you, giving them your real data is not a good idea. In many cases, most of the information requested on a company's webform is not required, so you can get away with leaving out important details about yourself. You can also choose not to accept cookies on many websites and deny application data requests without harming your user experience in any way.

— PCMag

- How to detect text written by ChatGPT and other AI tools.

- Copyleaks Research finds nearly 60% of GPT-3.5 outputs contained some form of plagiarized content.

- Plagiarism checker software reviews.

User Agreements Updated

Microsoft, Meta (Facebook) and others have already rewritten their user agreements to provide themselves with the widest access to other people's material (copyrighted or not) while protecting themselves from any liability resulting from its misuse.

Microsoft has noticed that some customers are afraid to use its Copilot AI given the program's potential to accidentally plagiarize copyrighted work.

As a result, Microsoft is pledging to shield Copilot customers from lawsuits in the event someone sues.

— PCMag

Legal Challenges in the Works

That doesn't make it ethical, and those actions are being challenged in court.

"I think this is the biggest robbery in the history of humanity," comments Kolochenko. "What the big gen-AI vendors did was simply scrape everything they could from the internet, without paying anyone anything and without even giving credit." Arguably, this should have been prevented by the 'consent' elements of existing privacy regulation — but it wasn't.

Once scraped it is converted into tokens and becomes the 'intelligence' of the model (the weights, just billions or trillions of numbers). It is effectively impossible to determine who said what, but what was said is jumbled up, mixed and matched, and returned as 'answers' to 'queries'.

The AI companies describe this response as 'original content'. Noam Chomsky describes it as 'plagiarism', perhaps on an industrialized scale. Either way, its accuracy is dependent upon the accuracy of existing internet content — which is frequently questionable.

— Security Week

- Judge allows authors' AI copyright case against Meta to proceed.

- AI-generated content and the law: Are you going to get sued?

- The New York Times sues OpenAI and Microsoft for copyright infringement.

Legal Liability with the User

Remember that AI, just like computers or fire, is a tool. The person using that tool is responsible for how it is used.

Computer ethics and AI ethics are easier than you think, for one big reason.

That reason is simple: if it's wrong to do something without a computer, it's still wrong to do it with a computer.

See how much puzzlement that principle clears away.

— Michael A. Covington

- What is AI ethics?

- Ethics in the age of AI: The human and moral impact of AI.

- The AI revolution is rotten to the core.

- This is the dangerous AI that got Sam Altman fired. Elon Musk, Ilya Sutskever.

Your Privacy Threatened by AI

AI is being rapidly deployed, and few are ensuring that our privacy is protected. AI can act as a massive vacuum, sucking up data across the Internet without regard for copyright, truth or liability.

A lack of comprehensive privacy laws in key markets like the U.S. means that there's nothing to stop companies from maximizing their data collection efforts to build AI systems and secure a competitive advantage.

Today's AI is built on massive amounts of data. Our data. To regulate AI meaningfully, we therefore have to address how companies collect, retain and sell on this data.

Data privacy laws are the foundation of effective AI regulation.

— Mozilla

AI chatbots are taking over the world. But if you want to guarantee your privacy when using them, running an LLM locally is going to be your best bet.

— Naomi Brockwell

Sensitive data is being captured by generative AI as people experiment with it, putting corporate secrets, customer privacy and data security at risk. Clearly, many people don't understand the dangers.

Despite Microsoft's touting how wonderful AI will be, its own report showcases the bad:

- Russia, Iran, China, and other nation states are increasingly incorporating AI-generated or enhanced content into their influence operations in search of greater productivity, efficiency, and audience engagement.

AI makes too many mistakes and jumps to too many erroneous conclusions. It still takes a human brain to look at the information gathered by AI to determine whether its conclusion is proper.

— Susan Bradley

More on YouTube about the challenges of AI:

- Godfather of AI shows how AI will kill us, how to avoid it.

- Godfather of AI: I tried to warn them, but we've already lost control! Geoffrey Hinton.

- This is the dangerous AI that got Sam Altman fired.

- Ex-Google officer finally speaks out on the dangers of AI.

- Seductive AI has no rules. It will change your life (or end it).

- Why this top AI guru thinks we might be in extinction level trouble.

What is Real?

Don't trust; verify. You have to experience it yourself. And you have to learn yourself.

This is going to be so critical as we enter this time in the next five years or 10 years because of the way that images are created, deep fakes, and videos; you will not, you will literally not know what is real and what is fake.

It will be almost impossible to tell. It will feel like you're in a simulation. Because everything will look manufactured, everything will look produced. It's very important that you shift your mindset or attempt to shift your mindset to verify the things that you feel you need through your experience and your intuition.

— Jack Dorsey

Mozilla found that YouTube's video recommendations often reflect the extreme rather than the norm, leading many viewers down a rabbit hole that can be destructive.

Mozilla and 37,380 YouTube users conducted a study to better understand harmful YouTube recommendations.

This is what we learned.

— OpenMedia

- YouTube Regrets: A crowdsourced investigation into YouTube's recommendation algorithm.

- Mozilla's approach to trustworthy AI.

- Jack Dorsey: "We won't know what's real anymore in the next 5–10 years."

- 5 technologies that mean you can never believe anything on the Internet again.

“Truth” Slanted by DEI

Diversity, equity, and inclusion (DEI) has replaced merit in hiring and promotions. Critics have mocked the acronym as standing for “didn't earn it” and have described DEI as Marxism rebranded for the West.

It's not so much that the truth doesn't matter anymore out there. It's that in large measure, it doesn't matter that the truth doesn't matter. The norms and institutions forged over decades by peer review, humility, fact-checking, good-faith debate and the evaluation of truth claims against objective evidence, verification and replication have been replaced with ideological rigidity, speech codes, Twitter-induced outrage spasms and a heavily-enforced consistency with "narrative." The social & intellectual mechanisms that have long served to transform disagreement into knowledge are in tatters.

— Terry Glavin

Do you want your doctor, lawyer or accountant selected because of DEI or because they are the most competent?

AI needs to say the truth & know the truth, even if the truth isn't popular

— Elon Musk

If DEI is more important than facts, it doesn't bode well for trust in AI-generated search results (or anything on the Internet). Unfortunately, this has become all too common in the mainstream media as well.

These models have become popular in artificial intelligence (AI) assistants like ChatGPT and already play an influential role in how humans access information. However, the behavior of LLMs varies depending on their design, training, and use.

Our results show that the ideological stance of an LLM often reflects the worldview of its creators. This raises important concerns around technological and regulatory efforts with the stated aim of making LLMs ideologically 'unbiased', and it poses risks for political instrumentalization.

— arXiv:2410.18417

The study also revealed significant normative disagreements between Western and non-Western LLMs when discussing prominent figures in geopolitical conflicts. This suggests that the political goals and ideologies of the creators are reflected in these models, which is a cause for concern. After all, we want our AI systems to be unbiased and objective, right?

Furthermore, the researchers emphasize an important distinction between Western models. There were notable differences in their ideological stances on topics like inclusion, social inequality, and political scandals — indicating that even within the same cultural region, there can be a variety of perspectives.

One key takeaway from this study is that achieving complete neutrality in LLMs may be inherently impossible due to the influence of their creators' beliefs.

— Ousia.io

- The echoes of their creators: How large language models reflect ideology.

- Large language models reflect the ideology of their creators.

Where Truth is No Longer Absolute

Fact and fiction mix in a dystopian reality where truth is no longer fixed.

Truth has shifted from a verifiable reality to a virtual truth where a person's claims about “their truth” trump verifiable fact. For example, the distinctions between the male and female sexes, verifiable by DNA, have been replaced with terminology like “people with uteruses” and “gender is fluid.”

Decisions based upon facts are being replaced with virtue signalling, such as rainbow crosswalks (which don't improve safety for people crossing the street and cost far more to produce). Fluid facts are permitted only if they follow the party line. Alternative viewpoints are deemed “misinformation” and are attacked through cancel culture and imprisonment.

A child r*pist avoids jail in Britain because the prisons are overcrowded.

Meanwhile people are being sent to jail for the crime of posting "offensive" things on social media.

— @PeterSweden on X

How Does AI Make Decisions?

Other online services have similar issues, with choices made by formulas managed by machines rather than by people.

AI and Deep Fakes

AI is also creating a misinformation problem through manipulated images. A new skill set is required to detect whether an image has been manipulated, or whether a person has been inserted into it to imply an association with its content.

The word "deepfake" includes a whole family of technologies that all share the use of deep learning neural networks to achieve their individual goals.

Deepfakes came to public attention when replacing someone's face in a video became easy. So someone could replace an actor's face with that of the US president or replace the president's mouth alone and make them say things that never happened.

For a while, a human would have to impersonate the voice, but deepfake technology can also replicate voices. Deepfake technology can now be used in real-time, which opens up the possibility that a hacker or other bad-faith actor could impersonate someone in a real-time video call or broadcast. Any video "evidence" you see on the internet must be treated as a potential deepfake until it's verified.

— How-To Geek

AI Voice Clones

AI is now capable of cloning human voices realistically.

Clone high-quality voices that are 99% accurate to their real human voices. No need for expensive equipment or complicated software.

VALL-E emerges in-context learning capabilities and can be used to synthesize high-quality personalized speech with only a 3-second enrolled recording of an unseen speaker as an acoustic prompt.

— VALL-E

Cloned voices can be so realistic that even a close relative cannot tell the difference, especially under stressful circumstances. The recommended solution is to agree on a verbal password that confirms you're actually speaking with that person.

AI Virtual Video

AI video has become so realistic that it can be very difficult to identify.

Young children have been found conversing with virtual assistants as if they were friends. Could AI replace humans in many other interactions?

AI Granny Takes Revenge on Scammers

Virgin Media O2 has used AI to create Daisy, an AI granny that wastes scammers' time.

It is entirely plausible that the same technology could be put to more malicious uses.

- New FakeCall malware variant hijacks Android devices for fraudulent banking calls.

- Watch out for this Gmail account takeover scam (service calls that sound a little too perfect).

- Attempted audio deepfake call targets LastPass employee.

- Worried about AI voice clone scams? Create a family password.

- Was this video generated using AI? Here's how to tell.

AI Artwork

It is important to distinguish between fine art on the one hand and clip art and illustrative images on the other. AI-based fine art is more likely to create problems with copyright and art theft.

AI-generated images are here to stay, and we need to learn how to recognize them and use them legitimately. They're not authoritative depictions of how things look, but they are handy for illustrating ideas. In what follows, I'll tell you how they work and address ethical and practical concerns.

Now that AI art is here, we are all going to need to become artists, or art critics, in a way that we haven't been before. Just as people had to get used to photographs in the 1800s and TV special effects in the 1960s, the educated public needs to become aware of AI art.

— Catherine Barrett

Artists Threatened

Artists have already found their works being used to create new art that is too close to their own artwork to be truly original. Such AI-generated works can damage the original artist's reputation or livelihood, and some artists have responded by sabotaging the AI used to generate them.

AI art is leaking into the mainstream in the form of stable diffusion and Lensa, but there are serious ethical concerns with this unregulated tech.

I'm NOT anti AI, in fact, I believe AI can be of immense benefit to us in the future. But the ethics of AI in its current state MUST be talked about, in order to steer this tech in the right direction.

— @samdoesarts on YouTube

Watch “Why artists are fed up with AI art” on YouTube. It discusses the unethical use of copyrighted material stolen from the Internet and then used to clone artwork in a manner that could ruin an artist's reputation while profiting these AI corporations.

Corporations Treated Differently

Think of how corporations like Disney or Warner Brothers would react if you put out a movie that was essentially a clone of one of their copyrighted works.

Why should anyone else's creative works be treated differently just because their creators lack the financial means to bankrupt those who steal their work to train AI?

Using artwork (and other creative content) to train AI should require the owner to opt in, rather than relying on an opt-out model that ignores the owner's rights.

- Can artists protect their work from AI? — BBC News.

- Who owns AI art?

- Doomed to be replaced: Is AI art theft?

Websites Vulnerable

Many of today's websites are generated “on the fly” using content-management systems like WordPress. This makes them vulnerable to being taken over by AI-powered malicious actors.

The ability to render sites on the fly based on search can be used for legitimate or harmful activities. As AI and generative AI searches continue to mature, websites will grow more susceptible to being taken over by force.

Once this technology becomes widespread, organizations could lose control of the information on their websites, but a fake page's malicious content will look authentic thanks to AI's ability to write, build and render a page as fast as a search result can be delivered.

— DigiCert 2024 Security Predictions

AI Manages Masses of Data Quickly

One of the most powerful advantages of AI is that it allows for the rapid manipulation of massive amounts of data.

Commercial and government entities have been collecting more data than they could possibly sift through.

Too Much Intelligence Data

The 9/11 attacks could have been prevented with intelligence data already held by the U.S. government. However, the sheer volume of collected data meant that the crucial information was lost in the background noise.

AI could provide the ability to rapidly process massive amounts of collected data so that such failures would not recur.

The Major AI Platforms

Most of the major tech companies have some sort of AI in development.

Apple Intelligence | OpenAI ChatGPT | Microsoft Copilot | Google DeepMind | IBM watsonx

Apple Intelligence

Apple Intelligence was released with iOS 18.1, iPadOS 18.1 and macOS Sequoia 15.1.

To check for updates on an iPhone or iPad: Settings ⇒ General ⇒ Software Update (on a Mac: System Settings ⇒ General ⇒ Software Update).

- Apple Intelligence is available today on iPhone, iPad, and Mac.

- Apple releases macOS Sequoia 15.1 with Apple Intelligence.

- There are six requirements to get Apple Intelligence features.

Privacy and Security

Draws on your personal context without allowing anyone else to access your personal data — not even Apple.

— Apple

Apple is thought to offer the more secure and private AI option compared to other smartphone makers in the Google Android ecosystem such as Samsung, which offer so called "hybrid AI." This is because with Apple Intelligence, the iPhone maker processes as much data as possible on the device.

For more complex requests, Apple's Private Cloud Compute runs on the company's own silicon servers. Built with custom Apple silicon and a hardened operating system designed for privacy, Apple calls PCC "the most advanced security architecture ever deployed for cloud AI compute at scale."

— Forbes

- Private Cloud Compute: A new frontier for AI privacy in the cloud.

- How Apple Intelligence's Privacy Stacks Up Against Android's 'Hybrid AI'.

- Apple Offers $1 million to hack Private Cloud Compute.

OpenAI ChatGPT

One of the best-known AI services is ChatGPT.

GPT-4 is an artificial intelligence large language model system that can mimic human-like speech and reasoning. It does so by training on a vast library of existing human communication, from classic works of literature to large swaths of the internet.

GPT-4 is a large multimodal model that can mimic prose, art, video or audio produced by a human. GPT-4 is able to solve written problems or generate original text or images. GPT-4 is the fourth generation of OpenAI's foundation model.

— Tech Republic

ChatGPT's developer, OpenAI, is focused on corporate users.

We believe our research will eventually lead to artificial general intelligence, a system that can solve human-level problems. Building safe and beneficial AGI is our mission.

- ChatGPT: Everything you need to know about the AI-powered chatbot.

- ChatGPT — OpenAI's research.

- GPT-4 cheat sheet: What is GPT-4, and what is it capable of?

- ChatGPT on Wikipedia.

- ChatGPT vs Google Bard.

- The best ChatGPT alternatives you can try right now.

Microsoft Copilot

The Microsoft Copilot portal is available to anyone, but requires you to sign into your Microsoft account. Copilot is also found in Edge, Bing and Windows.

As of Nov. 15, 2023, Microsoft consolidated three versions of Microsoft Copilot (Microsoft Copilot in Windows, Bing Chat Enterprise and Microsoft 365 Copilot) into two, Microsoft Copilot and Copilot for Microsoft 365.

In January 2024 Microsoft added another option, Copilot Pro.

— Tech Republic

Copilot for Microsoft 365

Copilot is being integrated into Microsoft 365.

Copilot is integrated into Microsoft 365 in two ways. It works alongside you, embedded in the Microsoft 365 apps you use every day — Word, Excel, PowerPoint, Outlook, Teams and more — to unleash creativity, unlock productivity and uplevel skills.

Today we're also announcing an entirely new experience: Business Chat. Business Chat works across the LLM, the Microsoft 365 apps, and your data — your calendar, emails, chats, documents, meetings and contacts — to do things you've never been able to do before.

You can give it natural language prompts like "Tell my team how we updated the product strategy," and it will generate a status update based on the morning's meetings, emails and chat threads.

— Microsoft

- Microsoft Copilot for Microsoft 365.

- Microsoft reduces Office's functionality to push you towards Copilot.

- Introducing Microsoft 365 Copilot — your copilot for work.

- Introducing Microsoft 365 Copilot (YouTube).

- Data, Privacy, and Security for Microsoft Copilot for Microsoft 365.

Copilot Pro

Copilot Pro provides priority access during peak times, as well as Copilot features inside the Microsoft 365 apps.

For individuals, creators, and power users looking to take their Copilot experience to the next level.

- What is Copilot? Microsoft's AI assistant explained.

- Microsoft Copilot, your everyday AI companion.

- Microsoft Copilot cheat sheet: Price, versions & benefits.

- Hands on with Microsoft Copilot in Windows 11, your latest AI assistant.

AI-powered 'Recall'

Microsoft announced Recall, a new Copilot+ feature that remembers everything you do on your computer and makes it searchable with AI.

Microsoft's new "Recall" feature consists of Windows taking screenshots behind-the-scenes of all on-screen activity. Users can then type into the AI-powered search tool at a later time and Recall will analyze those prior screenshots and pull up relevant past moments.

This search feature goes one step further than a simple text-based keyword search because the AI tool is able to look for relevant images that could match a text query as well.

"This is a Black Mirror episode," Musk said Monday night in response to a video with Microsoft CEO Satya Nadella and The Wall Street Journal. "Definitely turning this 'feature' off."

— PCMag

Microsoft has delayed the release of the Recall AI feature following significant negative feedback.

AI PCs

Microsoft has promoted AI laptops, hoping to capture that market. Right now, however, Copilot is cloud-based, which makes the local hardware largely irrelevant to it. Depending on the complexity of your request, that cloud dependence, and the delay it introduces, may be the Achilles heel of this push for AI-enabled hardware.

Microsoft talks a big game about AI laptops, saying they'll need NPU hardware that lets them accelerate AI tasks and a Copilot key on the keyboard for launching the AI assistant. But right now, those two things have nothing to do with each other.

Copilot can't use an NPU or other hardware you might find in an AI laptop at all. Whether you have an AI laptop with a cutting-edge NPU or not, Copilot works the same way. Copilot runs in the cloud on Microsoft's servers. Your PC's hardware isn't relevant.

The result of Copilot running in the cloud means it's slow, no matter what hardware you have. That may be fine if you're asking a complex question and are waiting for a detailed response, but you wouldn't want to use Copilot to change a setting in Windows. Who wants to sit around waiting for a response?

— PCMag

Google DeepMind

Google DeepMind is Google's central AI research organization.

DeepMind started in 2010, with an interdisciplinary approach to building general AI systems.

The research lab brought together new ideas and advances in machine learning, neuroscience, engineering, mathematics, simulation and computing infrastructure, along with new ways of organizing scientific endeavors.

— Google DeepMind

Gemini is Google's public-facing AI assistant.

Gemini gives you direct access to Google AI. Get help with writing, planning, learning, and more. The Gemini ecosystem represents Google's most capable AI.

Our Gemini models are built from the ground up for multimodality — reasoning seamlessly across text, images, audio, video, and code.

IBM watsonx AI

IBM's watsonx AI aims to provide AI services to businesses.

IBM watsonx AI and data platform includes three core components and a set of AI assistants designed to help you scale and accelerate the impact of AI with trusted data across your business.

AI Legislation

The imbalance between the mega-corporations developing and using AI and the average person is massive.

There are already significant privacy issues, notably the widespread collection of personal data, not to mention the fact that AI is not truly understood even by its own developers.

We all have the power — and the responsibility — to shape the future of AI development and governance.

AI doesn't have to be a dangerous cliff. It can be a bridge to open, accountable technology designed to benefit everyone. Maybe not tomorrow or next week, but with the right action, we can get there.

That's why the Mozilla community is coming together to advocate openness, accountability, and fairness in AI — ensuring that the critical conversations we're having right now prioritize a future where AI serves the common good.

— Mozilla Foundation

The only way to restore balance is legislation that puts privacy first.

Related Resources

On this site:

- Resources index

- Your privacy at risk

- Restoring privacy

- Identity theft

- The surveillance economy

- Canadian legislation